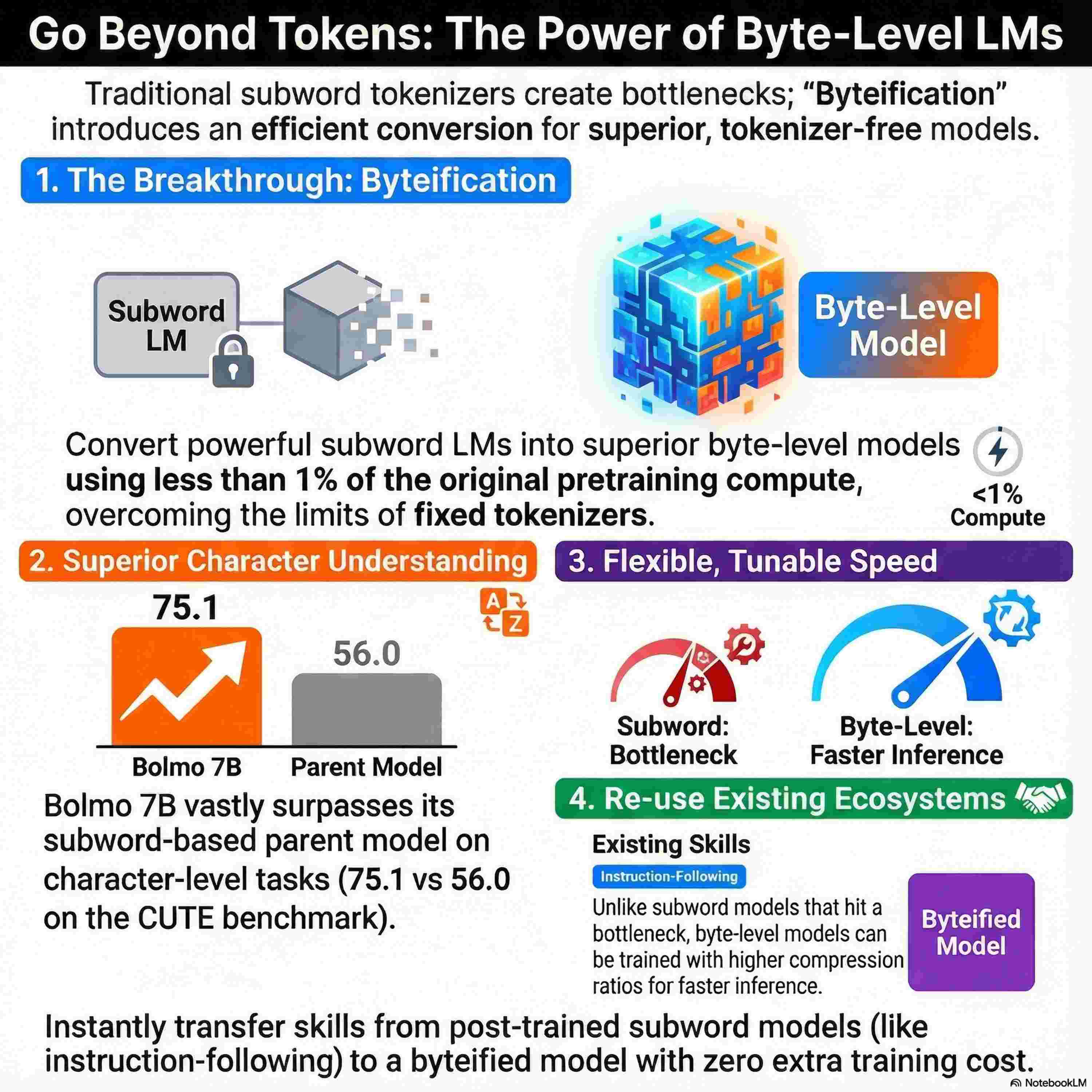

We discuss Bolmo, a family of byte-level language models from the Allen Institute for AI (AI2) and collaborating universities that offers a practical alternative to subword tokenization. Rather than being trained from scratch, Bolmo models are created by "byteifying" existing subword models such as OLMo: a two-stage distillation procedure converts the subword model into a byte-level one using less than 1% of the original pretraining budget. Architecturally, Bolmo combines a non-causal boundary predictor with local mLSTM layers to address the efficiency and character-understanding limitations of earlier systems. The research reports that Bolmo matches or exceeds its source models on coding and character-based tasks. The authors also show that Bolmo can be further optimized for inference speed and post-trained through the existing subword ecosystem via task arithmetic.
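To make the task-arithmetic idea concrete, here is a minimal sketch of the general technique: take the difference between a post-trained subword model and its base, then add that difference to the byteified model. This is an illustration under stated assumptions, not the authors' exact procedure. The function name `apply_task_vector`, the parameter names, and the toy weights are all hypothetical, and the sketch assumes that parameters shared between the models keep the same names and shapes.

```python
from typing import Dict, List

# Toy stand-in for a model checkpoint: parameter name -> flat list of weights.
Weights = Dict[str, List[float]]


def apply_task_vector(
    byte_base: Weights,
    subword_base: Weights,
    subword_post: Weights,
    scale: float = 1.0,
) -> Weights:
    """Add the task vector (subword_post - subword_base) to byte_base.

    Parameters that exist only in the byte-level model (hypothetically, the
    byte-specific components) are copied over unchanged.
    """
    merged: Weights = {}
    for name, values in byte_base.items():
        if name in subword_base and name in subword_post:
            # Shared parameter: shift it by the scaled post-training delta.
            merged[name] = [
                b + scale * (p - s)
                for b, s, p in zip(values, subword_base[name], subword_post[name])
            ]
        else:
            # Byte-only parameter: no task vector is available, keep as is.
            merged[name] = list(values)
    return merged


# Hypothetical toy checkpoints (names and values are made up for illustration).
byte_base = {"layer.w": [1.0, 2.0], "byte_only.w": [0.5]}
subword_base = {"layer.w": [1.0, 1.0]}
subword_post = {"layer.w": [1.5, 0.5]}

merged = apply_task_vector(byte_base, subword_base, subword_post)
```

The `scale` argument is a common knob in task-arithmetic setups for weakening or strengthening the transferred behavior. Whether Bolmo uses such a factor is not stated in the summary above.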